Register Two Point Sets#

Index#

See also

registration; transformation; pointset

Synopsis#

Register two point sets with the Jensen-Havrda-Charvat-Tsallis point set metric.

Results#

It: 0 metric value: -0.0641355

It: 1 metric value: -0.0955508

It: 2 metric value: -0.0956365

It: 3 metric value: -0.0956648

It: 4 metric value: -0.0956742

It: 5 metric value: -0.0956774

It: 6 metric value: -0.0956784

It: 7 metric value: -0.0956788

It: 8 metric value: -0.0956789

It: 9 metric value: -0.0956789

numberOfIterations: 10

Moving-source final value: -0.0956789

Moving-source final position: [0.9999999481711049, 3.6956646020565954e-9,

-3.9958144654776e-8, 1.0000000021678688,

1.9991709056354183, 1.999169080146488]

Optimizer scales: [10000, 9998.46502472856,

10000, 9998.46502472848,

1.0000000000010232, 1.0000000000010232]

Optimizer learning rate: 164.938

Jupyter Notebook#

Code#

Python#

#!/usr/bin/env python3

import sys

from math import pi, sin, cos

import itk

# Generate two circles with a small offset

def make_circles(l_dimension: int = 2):

PointSetType = itk.PointSet[itk.F, l_dimension]

RADIUS = 100

offset = [2.0] * l_dimension

fixed_points = PointSetType.New()

moving_points = PointSetType.New()

fixed_points.Initialize()

moving_points.Initialize()

step = 0.1

for count in range(0, int(2 * pi / step) + 1):

theta = count * step

fixed_point = list()

fixed_point.append(RADIUS * cos(theta))

for dim in range(1, l_dimension):

fixed_point.append(RADIUS * sin(theta))

fixed_points.SetPoint(count, fixed_point)

moving_point = [fixed_point[dim] + offset[dim] for dim in range(0, l_dimension)]

moving_points.SetPoint(count, moving_point)

return fixed_points, moving_points

def test_registration(l_dimension: int = 2):

# Define test parameters

num_iterations = 10

passed = True

tolerance = 0.05

# Define types

PointSetType = itk.PointSet[itk.F, l_dimension]

AffineTransformType = itk.AffineTransform[itk.D, l_dimension]

PointSetMetricType = itk.JensenHavrdaCharvatTsallisPointSetToPointSetMetricv4[

PointSetType

]

ShiftScalesType = itk.RegistrationParameterScalesFromPhysicalShift[

PointSetMetricType

]

OptimizerType = itk.RegularStepGradientDescentOptimizerv4[itk.D]

# Make point sets

fixed_set, moving_set = make_circles(l_dimension)

transform = AffineTransformType.New()

transform.SetIdentity()

metric = PointSetMetricType.New(

FixedPointSet=fixed_set,

MovingPointSet=moving_set,

PointSetSigma=1.0,

KernelSigma=10.0,

UseAnisotropicCovariances=False,

CovarianceKNeighborhood=5,

EvaluationKNeighborhood=10,

MovingTransform=transform,

Alpha=1.1,

)

metric.Initialize()

shift_scale_estimator = ShiftScalesType.New(

Metric=metric, VirtualDomainPointSet=metric.GetVirtualTransformedPointSet()

)

optimizer = OptimizerType.New(

Metric=metric,

NumberOfIterations=num_iterations,

ScalesEstimator=shift_scale_estimator,

MaximumStepSizeInPhysicalUnits=3.0,

MinimumConvergenceValue=0.0,

ConvergenceWindowSize=10,

)

def print_iteration():

print(

f"It: {optimizer.GetCurrentIteration()}"

f" metric value: {optimizer.GetCurrentMetricValue():.6f} "

)

optimizer.AddObserver(itk.IterationEvent(), print_iteration)

# Run optimization to align the point sets

optimizer.StartOptimization()

print(f"Number of iterations: {num_iterations}")

print(f"Moving-source final value: {optimizer.GetCurrentMetricValue()}")

print(f"Moving-source final position: {list(optimizer.GetCurrentPosition())}")

print(f"Optimizer scales: {list(optimizer.GetScales())}")

print(f"Optimizer learning rate: {optimizer.GetLearningRate()}")

# applying the resultant transform to moving points and verify result

print("Fixed\tMoving\tMovingTransformed\tFixedTransformed\tDiff")

moving_inverse = metric.GetMovingTransform().GetInverseTransform()

fixed_inverse = metric.GetFixedTransform().GetInverseTransform()

def print_point(vals: list) -> str:

return f'[{",".join(f"{x:.4f}" for x in vals)}]'

for n in range(0, metric.GetNumberOfComponents()):

transformed_moving_point = moving_inverse.TransformPoint(moving_set.GetPoint(n))

transformed_fixed_point = fixed_inverse.TransformPoint(fixed_set.GetPoint(n))

difference = [

transformed_moving_point[dim] - transformed_fixed_point[dim]

for dim in range(0, l_dimension)

]

print(

f"{print_point(fixed_set.GetPoint(n))}"

f"\t{print_point(moving_set.GetPoint(n))}"

f"\t{print_point(transformed_moving_point)}"

f"\t{print_point(transformed_fixed_point)}"

f"\t{print_point(difference)}"

)

if any(abs(difference[dim]) > tolerance for dim in range(0, l_dimension)):

passed = False

if not passed:

raise Exception("Transform outside of allowable tolerance")

else:

print("Transform is within allowable tolerance.")

if __name__ == "__main__":

if len(sys.argv) == 2:

dimension = int(sys.argv[1])

test_registration(dimension)

else:

test_registration()

C++#

#include "itkJensenHavrdaCharvatTsallisPointSetToPointSetMetricv4.h"

#include "itkGradientDescentOptimizerv4.h"

#include "itkTransform.h"

#include "itkAffineTransform.h"

#include "itkRegistrationParameterScalesFromPhysicalShift.h"

#include "itkCommand.h"

#include <fstream>

template <typename TFilter>

class itkJensenHavrdaCharvatTsallisPointSetMetricRegistrationTestCommandIterationUpdate : public itk::Command

{

public:

using Self = itkJensenHavrdaCharvatTsallisPointSetMetricRegistrationTestCommandIterationUpdate;

using Superclass = itk::Command;

using Pointer = itk::SmartPointer<Self>;

itkNewMacro(Self);

protected:

itkJensenHavrdaCharvatTsallisPointSetMetricRegistrationTestCommandIterationUpdate() = default;

public:

void

Execute(itk::Object * caller, const itk::EventObject & event) override

{

Execute((const itk::Object *)caller, event);

}

void

Execute(const itk::Object * object, const itk::EventObject & event) override

{

if (typeid(event) != typeid(itk::IterationEvent))

{

return;

}

const auto * optimizer = dynamic_cast<const TFilter *>(object);

if (!optimizer)

{

itkGenericExceptionMacro("Error dynamic_cast failed");

}

std::cout << "It: " << optimizer->GetCurrentIteration() << " metric value: " << optimizer->GetCurrentMetricValue();

std::cout << std::endl;

}

};

int

main(int argc, char * argv[])

{

constexpr unsigned int Dimension = 2;

unsigned int numberOfIterations = 10;

if (argc > 1)

{

numberOfIterations = std::stoi(argv[1]);

}

using PointSetType = itk::PointSet<unsigned char, Dimension>;

using PointType = PointSetType::PointType;

auto fixedPoints = PointSetType::New();

fixedPoints->Initialize();

auto movingPoints = PointSetType::New();

movingPoints->Initialize();

// two ellipses, one rotated slightly

/*

// Having trouble with these, as soon as there's a slight rotation added.

unsigned long count = 0;

for( float theta = 0; theta < 2.0 * itk::Math::pi; theta += 0.1 )

{

float radius = 100.0;

PointType fixedPoint;

fixedPoint[0] = 2 * radius * std::cos( theta );

fixedPoint[1] = radius * std::sin( theta );

fixedPoints->SetPoint( count, fixedPoint );

PointType movingPoint;

movingPoint[0] = 2 * radius * std::cos( theta + (0.02 * itk::Math::pi) ) + 2.0;

movingPoint[1] = radius * std::sin( theta + (0.02 * itk::Math::pi) ) + 2.0;

movingPoints->SetPoint( count, movingPoint );

count++;

}

*/

// two circles with a small offset

PointType offset;

for (unsigned int d = 0; d < Dimension; ++d)

{

offset[d] = 2.0;

}

unsigned long count = 0;

for (float theta = 0; theta < 2.0 * itk::Math::pi; theta += 0.1)

{

PointType fixedPoint;

float radius = 100.0;

fixedPoint[0] = radius * std::cos(theta);

fixedPoint[1] = radius * std::sin(theta);

if (Dimension > 2)

{

fixedPoint[2] = radius * std::sin(theta);

}

fixedPoints->SetPoint(count, fixedPoint);

PointType movingPoint;

movingPoint[0] = fixedPoint[0] + offset[0];

movingPoint[1] = fixedPoint[1] + offset[1];

if (Dimension > 2)

{

movingPoint[2] = fixedPoint[2] + offset[2];

}

movingPoints->SetPoint(count, movingPoint);

count++;

}

using AffineTransformType = itk::AffineTransform<double, Dimension>;

auto transform = AffineTransformType::New();

transform->SetIdentity();

// Instantiate the metric

using PointSetMetricType = itk::JensenHavrdaCharvatTsallisPointSetToPointSetMetricv4<PointSetType>;

auto metric = PointSetMetricType::New();

metric->SetFixedPointSet(fixedPoints);

metric->SetMovingPointSet(movingPoints);

metric->SetPointSetSigma(1.0);

metric->SetKernelSigma(10.0);

metric->SetUseAnisotropicCovariances(false);

metric->SetCovarianceKNeighborhood(5);

metric->SetEvaluationKNeighborhood(10);

metric->SetMovingTransform(transform);

metric->SetAlpha(1.1);

metric->Initialize();

// scales estimator

using RegistrationParameterScalesFromShiftType =

itk::RegistrationParameterScalesFromPhysicalShift<PointSetMetricType>;

RegistrationParameterScalesFromShiftType::Pointer shiftScaleEstimator =

RegistrationParameterScalesFromShiftType::New();

shiftScaleEstimator->SetMetric(metric);

// needed with pointset metrics

shiftScaleEstimator->SetVirtualDomainPointSet(metric->GetVirtualTransformedPointSet());

// optimizer

using OptimizerType = itk::GradientDescentOptimizerv4;

auto optimizer = OptimizerType::New();

optimizer->SetMetric(metric);

optimizer->SetNumberOfIterations(numberOfIterations);

optimizer->SetScalesEstimator(shiftScaleEstimator);

optimizer->SetMaximumStepSizeInPhysicalUnits(3.0);

using CommandType = itkJensenHavrdaCharvatTsallisPointSetMetricRegistrationTestCommandIterationUpdate<OptimizerType>;

auto observer = CommandType::New();

optimizer->AddObserver(itk::IterationEvent(), observer);

optimizer->SetMinimumConvergenceValue(0.0);

optimizer->SetConvergenceWindowSize(10);

optimizer->StartOptimization();

std::cout << "numberOfIterations: " << numberOfIterations << std::endl;

std::cout << "Moving-source final value: " << optimizer->GetCurrentMetricValue() << std::endl;

std::cout << "Moving-source final position: " << optimizer->GetCurrentPosition() << std::endl;

std::cout << "Optimizer scales: " << optimizer->GetScales() << std::endl;

std::cout << "Optimizer learning rate: " << optimizer->GetLearningRate() << std::endl;

// applying the resultant transform to moving points and verify result

std::cout << "Fixed\tMoving\tMovingTransformed\tFixedTransformed\tDiff" << std::endl;

bool passed = true;

PointType::ValueType tolerance = 1e-2;

AffineTransformType::InverseTransformBasePointer movingInverse = metric->GetMovingTransform()->GetInverseTransform();

AffineTransformType::InverseTransformBasePointer fixedInverse = metric->GetFixedTransform()->GetInverseTransform();

for (unsigned int n = 0; n < metric->GetNumberOfComponents(); ++n)

{

// compare the points in virtual domain

PointType transformedMovingPoint = movingInverse->TransformPoint(movingPoints->GetPoint(n));

PointType transformedFixedPoint = fixedInverse->TransformPoint(fixedPoints->GetPoint(n));

PointType difference;

difference[0] = transformedMovingPoint[0] - transformedFixedPoint[0];

difference[1] = transformedMovingPoint[1] - transformedFixedPoint[1];

std::cout << fixedPoints->GetPoint(n) << "\t" << movingPoints->GetPoint(n) << "\t" << transformedMovingPoint << "\t"

<< transformedFixedPoint << "\t" << difference << std::endl;

if (fabs(difference[0]) > tolerance || fabs(difference[1]) > tolerance)

{

passed = false;

}

}

if (!passed)

{

std::cerr << "Results do not match truth within tolerance." << std::endl;

return EXIT_FAILURE;

}

return EXIT_SUCCESS;

}

Classes demonstrated#

-

template<typename TPointSet, class TInternalComputationValueType = double>

class JensenHavrdaCharvatTsallisPointSetToPointSetMetricv4 : public itk::PointSetToPointSetMetricv4<TPointSet, TPointSet, TInternalComputationValueType> Implementation of the Jensen Havrda Charvat Tsallis Point Set metric.

Given a specified transform and direction, this class calculates the value and derivative between a “fixed” and “moving” point set pair using the Havrda-Charvat-Tsallis entropy family, a generalization of the well-known Shannon entropy, and the Jensen divergence. Another way to look at the family of information-theoretic measures is that the points are used to construct the corresponding probably density functions.

In addition, we allow the user to invoke a manifold parzen windowing of the data. Instead of an isotropic Gaussian being associated with each point, we can actually calculate the covariance matrix for each point such that it reflects the locate point set structure.

To speed up the metric calculation, we use ITK’s K-d tree to query the metric value only for a given neighborhood. Considering that probably only a small subset of points is needed to get a good approximation of the metric value for a single point, this is probably warranted. So what we do is transform each point (with the specified transform) and construct the k-d tree from the transformed points.

Contributed by Nicholas J. Tustison, James C. Gee in the Insight Journal paper: https://www.insight-journal.org/browse/publication/317

N.J. Tustison, S. P. Awate, G. Song, T. S. Cook, and J. C. Gee. “Point set registration using Havrda-Charvat-Tsallis entropy measures” IEEE Transactions on Medical Imaging, 30(2):451-60, 2011.

- Note

The original work reported in Tustison et al. 2011 optionally employed a regularization term to prevent the moving point set(s) from coalescing to a single point location. However, within the registration framework, this term is of limited utility as such regularization is dictated by the transform and any explicit regularization terms. Also note that the published work applies to multiple points sets each of which could be considered “moving” but this is also not applicable for this particular implementation.

- REFERENCE

-

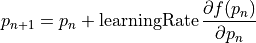

template<typename TInternalComputationValueType>

class GradientDescentOptimizerv4Template : public itk::GradientDescentOptimizerBasev4Template<TInternalComputationValueType> Gradient descent optimizer.

GradientDescentOptimizer implements a simple gradient descent optimizer. At each iteration the current position is updated according to

Optionally, the best metric value and matching parameters can be stored and retried via GetValue() and GetCurrentPosition(). See SetReturnBestParametersAndValue().

Gradient scales can be manually set or automatically estimated, as documented in the base class. The learing rate defaults to 1.0, and can be set in two ways: 1) manually, via

SetLearningRate(). Or, 2) automatically, either at each iteration or only at the first iteration, by assigning a ScalesEstimator via SetScalesEstimator(). When a ScalesEstimator is assigned, the optimizer is enabled by default to estimate learning rate only once, during the first iteration. This behavior can be changed via SetDoEstimateLearningRateAtEveryIteration() and SetDoEstimateLearningRateOnce(). For learning rate to be estimated at each iteration, the user must call SetDoEstimateLearningRateAtEveryIteration(true) and SetDoEstimateLearningRateOnce(false). When enabled, the optimizer computes learning rate(s) such that at each step, each voxel’s change in physical space will be less than m_MaximumStepSizeInPhysicalUnits.m_LearningRate = m_MaximumStepSizeInPhysicalUnits / m_ScalesEstimator->EstimateStepScale(scaledGradient)

where m_MaximumStepSizeInPhysicalUnits defaults to the voxel spacing returned by m_ScalesEstimator->EstimateMaximumStepSize() (which is typically 1 voxel), and can be set by the user via SetMaximumStepSizeInPhysicalUnits(). When SetDoEstimateLearningRateOnce is enabled, the voxel change may become being greater than m_MaximumStepSizeInPhysicalUnits in later iterations.

- Note

Unlike the previous version of GradientDescentOptimizer, this version does not have a “maximize/minimize” option to modify the effect of the metric derivative. The assigned metric is assumed to return a parameter derivative result that “improves” the optimization when added to the current parameters via the metric::UpdateTransformParameters method, after the optimizer applies scales and a learning rate.

Subclassed by itk::GradientDescentLineSearchOptimizerv4Template< TInternalComputationValueType >, itk::MultiGradientOptimizerv4Template< TInternalComputationValueType >, itk::QuasiNewtonOptimizerv4Template< TInternalComputationValueType >, itk::RegularStepGradientDescentOptimizerv4< TInternalComputationValueType >